A.H.

How I Replaced My OpenAI Embedding Spend with Open Source Models and Modal

Update 10/29/2023: I wrote this post before I had the chance to set up and try out Hugging Face’s text-embeddings-inference, which turned out to be way faster than my approach below. I’ve left my original post below, unchanged, but figured I’d share the latest.

The long story short is that on a T4 GPU, you can get to ~100K tok/s if you run the code on the GPU itself (to avoid network latency) or 70K tok/s if you’re sending the batches over the wire. That is about 6x (or roughly 4x, respectively) faster than OpenAI’s rate limit of 1 million tokens per minute!

I’ve been messing around with LLMs and embeddings for a few personal projects, some of which now involve processing reasonably large amounts of text data (tens of gigabytes).

The Motivation

One use case that I have involves generating summaries of a public company’s earnings transcripts. Usually, companies hold earnings calls on a quarterly basis, with calls that last roughly an hour; so, for a company that has been public for 10 years, that’s roughly 40 hours of text. Using OpenAI’s gpt-3.5-turbo and text-embedding-ada-002, wordcel (a Python package I wrote to thread API calls), and llama_index for in-memory vector search, it costs (ballpark) around 40-50 cents per company and takes around 20 minutes to generate the final document with summaries for each earnings transcript.

While that doesn’t sound like a lot, the costs could grow pretty quickly if I wanted to scale up. So far, I haven’t, because I don’t want to be spending hundreds or thousands of dollars a month on inference costs.

On top of that, I can’t run inference any faster than OpenAI’s rate limits: 90,000 tokens per minute for gpt-3.5-turbo and 1 million tokens per minute for text-embedding-ada-002.
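To put those limits on a per-second scale, here is a small sketch that converts a tokens-per-minute limit into a throughput ceiling and a minimum wall-clock time. The function names are illustrative; the two limits are the ones quoted above.

```python
# Rate limits quoted above, in tokens per minute.
EMBEDDING_TPM = 1_000_000  # text-embedding-ada-002
CHAT_TPM = 90_000          # gpt-3.5-turbo


def tokens_per_second(tokens_per_minute: float) -> float:
    """Throughput ceiling implied by a tokens-per-minute rate limit."""
    return tokens_per_minute / 60


def min_minutes(total_tokens: float, tokens_per_minute: float) -> float:
    """Lower bound on wall-clock minutes needed to push total_tokens through."""
    return total_tokens / tokens_per_minute


if __name__ == "__main__":
    print(f"{tokens_per_second(EMBEDDING_TPM):,.0f} tok/s")         # 16,667 tok/s
    print(f"{min_minutes(2_000_000, EMBEDDING_TPM):.1f} minutes")   # 2.0 minutes
    print(f"{min_minutes(2_000_000, CHAT_TPM):.1f} minutes")        # 22.2 minutes
```

These are floors, not estimates: real jobs also pay for request overhead and retries.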

This had me wondering if the developments in open source language models and the wide range of tooling out there were enough for me to come up with an alternative to OpenAI. The grail (I’ll stop short of calling it “holy” per se) here would be to get something that was faster, cheaper, and as easy to use as OpenAI.

Criteria

To this end, the axes that matter to me are quality, speed, cost, and ease of setup and maintenance.

- Quality: If the Hugging Face text embeddings leaderboard is to be believed, then many OSS models are already on par with or better than OpenAI’s embedding offering. While I have some doubts about the claims of OSS superiority, model evaluation is outside the scope of this post, so I’ll happily leave that up to experts smarter than me.

- Speed: The OSS models available via Hugging Face and SentenceTransformers ranged from 130MB (!!) to several gigabytes in size. While size isn’t a direct indicator of inference speed, it was a good sign that I could get to the inference speeds I want without significant setup time or headache.

- Cost: The OSS models are theoretically free, but if you want to run them at high throughput then you will need to scale compute, which, of course, is not free.

- Ease of use / maintenance: I have neither the time nor the desire to manage cloud infrastructure, so I wanted something that could get me up and running with relatively little setup time and a gentle learning curve.

The Solution

I’ll cut to the chase and just tell you what I ended up converging on.

The setup of my problem is that I have a Postgres table with columns date and text (among others), and another table of embeddings of the text in the first table, with columns date, chunked_text, and embedding.
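To make the setup concrete, here is a minimal sketch in plain Python of the two tables and of the question the pipeline has to answer first: which source rows have no embeddings yet? The class and function names are hypothetical, and the `id` column is an assumption based on the columns selected in `main` further down; the real pipeline does this against Postgres rather than in memory.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SourceRow:
    """One row of the text table (hypothetical in-memory stand-in)."""
    id: int
    date: str
    text: str


@dataclass
class EmbeddingRow:
    """One row of the embeddings table: one chunk of one source row."""
    id: int  # id of the source row this chunk came from (assumed column)
    date: str
    chunked_text: str
    embedding: List[float]


def unembedded(source: List[SourceRow], embedded: List[EmbeddingRow]) -> List[SourceRow]:
    """Return the source rows that have no chunk in the embeddings table."""
    done = {row.id for row in embedded}
    return [row for row in source if row.id not in done]


if __name__ == "__main__":
    source = [
        SourceRow(1, "2023-01-31", "Q4 call transcript"),
        SourceRow(2, "2023-04-30", "Q1 call transcript"),
    ]
    embedded = [EmbeddingRow(1, "2023-01-31", "Q4 call", [0.1, 0.2])]
    print([row.id for row in unembedded(source, embedded)])  # [2]
```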

For my model, I ended up using bge-small-en-v1.5, the smallest model in the top 10 of the Hugging Face leaderboard. I had (and still have?) some doubts that such a small model would be as good as OpenAI’s ada, but as of this writing bge-small is in eighth place on the leaderboard and ada is in 15th place. Again, I’ll leave it up to the experts to decide which is better.

For cloud infrastructure, Modal ended up being a delightful experience to use. With two to three additional decorators on my Python functions and relatively few and minor changes to the structure of my code, Modal enabled me to deploy and scale my functions across CPU/GPU containers.

I do not plan to publish the code, as it is not really meant for general use and would require some custom setup to get working, but if you would like to see it anyway, feel free to message me.

Anyway, the important parts of the code are below.

My embedding function, and the Modal wrapper function. Note that I specified the cheapest GPU.

    from typing import List

    import modal
    import numpy as np
    import pandas as pd
    from modal import Image
    from sentence_transformers import SentenceTransformer

    # Assumed definitions: the original snippet uses `stub` and EMBEDDING_MODEL
    # without showing them. The app name below is a placeholder.
    stub = modal.Stub("embeddings")
    EMBEDDING_MODEL = "BAAI/bge-small-en-v1.5"

    EMBEDDING_IMAGE = Image.debian_slim().pip_install(
        "pandas", "numpy", "python-dotenv",
        "langchain", "sentence-transformers",
        "psycopg2-binary", "sqlalchemy", "tiktoken"
    )

    def embed(texts: List[str], model=None) -> np.ndarray:
        """Embed array of texts."""
        assert model is not None, "Must specify a model."
        model = SentenceTransformer(model)
        # `encode` returns one embedding vector per input text.
        embeddings = model.encode(texts)
        return embeddings

    @stub.function(
        image=EMBEDDING_IMAGE,
        gpu="T4",
    )
    def embed_docs(docs: pd.DataFrame):
        """Embed a prepared dataframe of chunks, and add each embedding as another
        column."""
        print("Embedding...")
        embeddings = embed(docs["chunked_text"].tolist(), model=EMBEDDING_MODEL)
        embeddings = pd.DataFrame(embeddings)
        docs["embedding"] = embeddings.values.tolist()
        return docs

My chunking function, and the Modal wrapper function around it.

    from typing import List

    from langchain.text_splitter import SentenceTransformersTokenTextSplitter

    # DEFAULT_CHUNK_OVERLAP_LEN and EMBEDDING_MODEL are module-level constants
    # defined elsewhere in the script.

    def chunk_text(text, model=None, chunk_overlap=DEFAULT_CHUNK_OVERLAP_LEN) -> List[str]:
        """Split text into chunks."""
        assert model is not None, "Must specify a model."
        text_splitter = SentenceTransformersTokenTextSplitter(
            model_name=model, chunk_overlap=chunk_overlap
        )
        texts = text_splitter.split_text(text)
        return texts

    @stub.function(image=EMBEDDING_IMAGE)
    def chunk_docs(docs: List[str]):
        """Chunk a list of documents."""
        chunked_docs = [chunk_text(doc, model=EMBEDDING_MODEL) for doc in docs]
        return chunked_docs

And then the main subroutine, which functioned as the entrypoint to Modal.

    # CHUNK_BATCH_SIZE and GPU_LIMIT are module-level constants (rows per batch)
    # defined elsewhere in the script.

    @stub.local_entrypoint()
    def main(src_table, dst_table, column, start_date, end_date, local=False):
        """Embed a column in the table."""
        # Some assertions and validations around the arguments.
        ...

        # Getting the diff between the `src_table` of text and the `dst_table`
        # of embeddings.
        ...
        unembedded_rows = pd.concat(unembedded_rows)

        # Chunking step.
        print("Chunking...")
        chunk_batches = [
            batch[1][column].tolist()
            for batch in unembedded_rows.groupby(unembedded_rows.index // CHUNK_BATCH_SIZE)
        ]
        chunked_text = []
        for result in chunk_docs.map(chunk_batches):
            chunked_text.extend(result)
        flattened: pd.DataFrame = flatten_chunked_text(chunked_text)

        # Create equal length subsections of the dataframe.
        batches = [batch[1] for batch in flattened.groupby(flattened.index // GPU_LIMIT)]

        # Embedding step.
        print(f"Embedding {len(batches)} batches...")
        print(set([len(batch) for batch in batches]))
        embedded = []
        for embeddings in embed_docs.map(batches):
            # Join the embeddings df with the original dataframe by the index of the
            # original df and the `doc_idx` column of the embeddings df, in order to
            # get the `id` column of the original df.
            embeddings = embeddings.join(unembedded_rows, on="doc_idx")
            embeddings = embeddings[["id", "date", "chunked_text", "embedding"]]
            embedded.append(embeddings)
        print(f"Embedded {len(batches)} batches.")

        for embeddings in embedded:
            _upload_df_to_table(embeddings, dst_table)

The Results

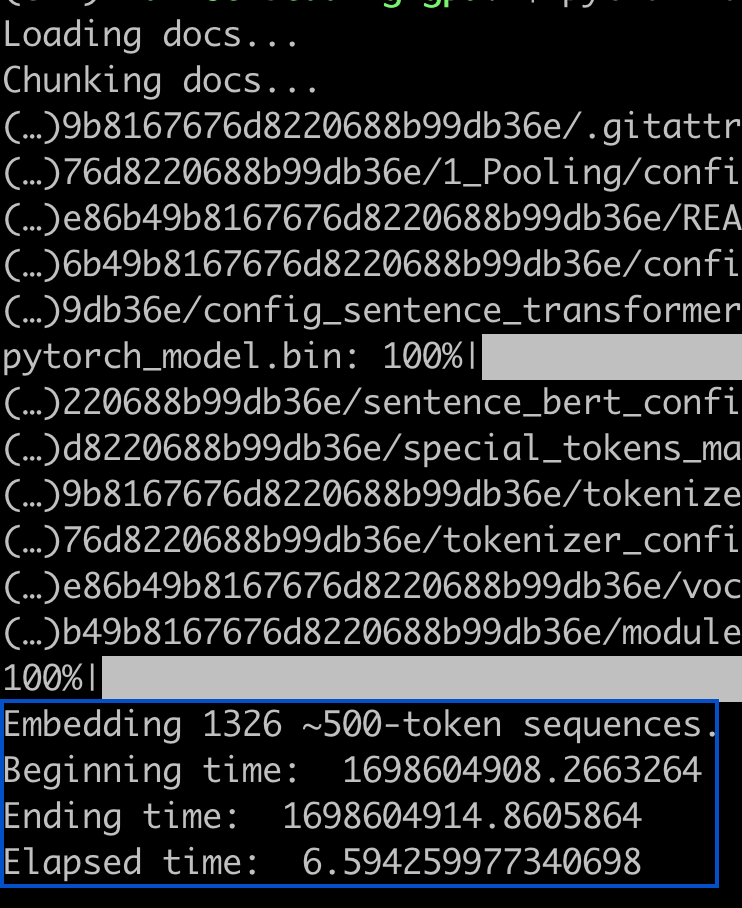

I tested out the above code on around 2 million tokens of data.

2 million tokens would cost around 20 cents ($0.0001 per 1,000 tokens) with OpenAI, and take around 2 minutes to embed with rate limits. With the above system and bge-small, it took around half that time and cost roughly 25 cents with Modal (though around 5 cents of that was spent on CPUs for chunking). So it was roughly comparable on cost and about twice as fast.
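The cost side of that comparison is simple enough to check by hand; the sketch below just spells out the arithmetic. The OpenAI price and the Modal spend are the figures from this post, and the variable and function names are illustrative.

```python
# Figures quoted in this post.
OPENAI_PRICE_PER_1K_TOKENS = 0.0001  # USD, text-embedding-ada-002
MODAL_TOTAL_USD = 0.25               # measured spend for the whole run
MODAL_CPU_USD = 0.05                 # portion spent on CPU chunking


def openai_embedding_cost(total_tokens: int) -> float:
    """Cost in USD of embedding total_tokens at the per-1K-token price."""
    return total_tokens / 1_000 * OPENAI_PRICE_PER_1K_TOKENS


if __name__ == "__main__":
    tokens = 2_000_000
    openai_usd = openai_embedding_cost(tokens)
    modal_gpu_usd = MODAL_TOTAL_USD - MODAL_CPU_USD
    print(f"OpenAI: ${openai_usd:.2f}")                                  # OpenAI: $0.20
    print(f"Modal: ${MODAL_TOTAL_USD:.2f} (${modal_gpu_usd:.2f} GPU)")   # Modal: $0.25 ($0.20 GPU)
    print(f"Modal / OpenAI: {MODAL_TOTAL_USD / openai_usd:.2f}x")        # Modal / OpenAI: 1.25x
```

On the GPU portion alone the two come out about even; the gap is the CPU time spent chunking.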

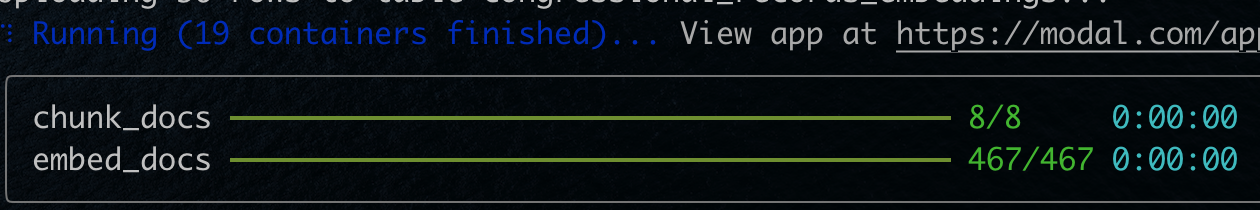

As far as ease of maintenance and developer experience: Modal makes it really easy to track how your jobs are doing. For example, below is a screenshot of the two functions represented above, split across 19 containers (9 CPUs, followed by 10 GPUs).
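The fan-out comes from how `main` splits the work: rows are grouped by `index // batch_size` (with `CHUNK_BATCH_SIZE` for chunking and `GPU_LIMIT` for embedding), and each group becomes one call that Modal can schedule onto a container. Here is a plain-Python stand-in for that pandas `groupby` pattern; `batch_by_position` and the sizes used are illustrative, not taken from the pipeline.

```python
from typing import Dict, List, Sequence


def batch_by_position(rows: Sequence[str], batch_size: int) -> List[List[str]]:
    """Group rows so that the row at position i lands in batch i // batch_size."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    batches: Dict[int, List[str]] = {}
    for position, row in enumerate(rows):
        batches.setdefault(position // batch_size, []).append(row)
    # Return the batches in order of their batch number.
    return [batches[key] for key in sorted(batches)]


if __name__ == "__main__":
    chunks = [f"chunk-{i}" for i in range(10)]
    batches = batch_by_position(chunks, batch_size=4)
    print([len(batch) for batch in batches])  # [4, 4, 2]
```

Every batch is full except possibly the last, which is why printing the set of batch lengths in `main` is a quick sanity check.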

Bloopers

Other things I tried included:

- Other models, like mpnet-base and bge-base, which are both around 400 megabytes. With these models, it took 2-3 minutes and cost anywhere from 40 cents to a few dollars, depending on the GPUs used.

- A bunch of different GPU options from Modal. I think sticking with the cheapest (T4) ended up being best for cost, without sacrificing very much speed.

- Hugging Face’s Text Embeddings Inference is a convenient Docker image that, if I’m not misreading, can provide 6.5 million tokens/second of inference (400 reqs/second x 32 inputs per request x 512 tokens per input), which is 400x faster than either the above or OpenAI. It should have been fairly easy to set up: run something like `docker run --gpus all -p 8080:80 -v $volume:/data --pull always ghcr.io/huggingface/text-embeddings-inference:0.2.2 --model-id $model --revision $revision` and then POST to an endpoint. But, as it turns out, getting GPUs today is kind of difficult, and the nightmare of getting CUDA versions to work was too annoying. On top of that, serverless GPUs today weren’t particularly easy to get set up. But, if I could get this working, it could be a solution that is orders of magnitude cheaper and faster than OpenAI.
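For reference, the "POST to an endpoint" step might look roughly like the sketch below, using only the standard library. It assumes the container from the `docker run` command above is listening on `localhost:8080` and that the server accepts a JSON body of the form `{"inputs": [...]}` on an `/embed` route, which is how the Text Embeddings Inference README describes it; the function names are illustrative, so check the docs for the version you run.

```python
import json
import urllib.request
from typing import List


def build_embed_request(
    texts: List[str], base_url: str = "http://localhost:8080"
) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying a batch of texts."""
    payload = json.dumps({"inputs": texts}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/embed",  # assumed route, per the TEI README
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def embed_remote(
    texts: List[str], base_url: str = "http://localhost:8080"
) -> List[List[float]]:
    """Send the batch and return one embedding vector per input text."""
    request = build_embed_request(texts, base_url)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read())
```

Calling `embed_remote(["first chunk", "second chunk"])` needs a running server; `build_embed_request` can be inspected without one.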

Conclusion

Embeddings aren’t that expensive. At $0.0001 per 1K tokens from OpenAI, it was arguably not worth the effort to set up a separate inference pipeline. But, I do think this represents a fairly important moment for open source AI, where an average engineer can self-serve to get to cost and speed parity with OpenAI, relatively easily.